So recently, I found out something interesting: AI-generated music is everywhere. I mean, like, way more than I expected.

It all started when I was listening to my daily recommended songs on a Chinese music app. One song came on, and the vibe was… weird? After doing some digging on the singer’s page and in the comment section, I found that, yep, it was AI-made. And honestly, I was kind of stunned. AI has officially invaded the music world. Not just helping artists as a tool, but literally making songs.

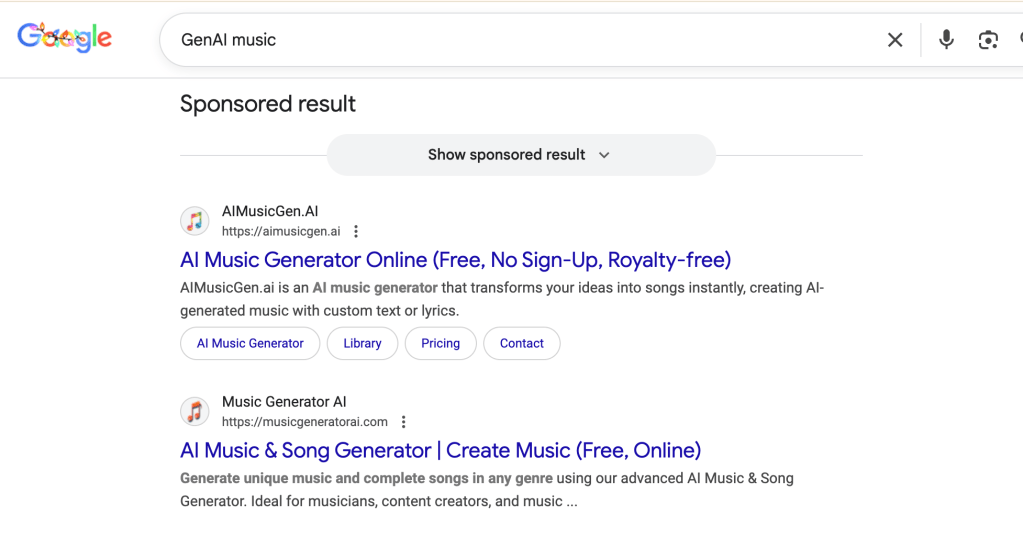

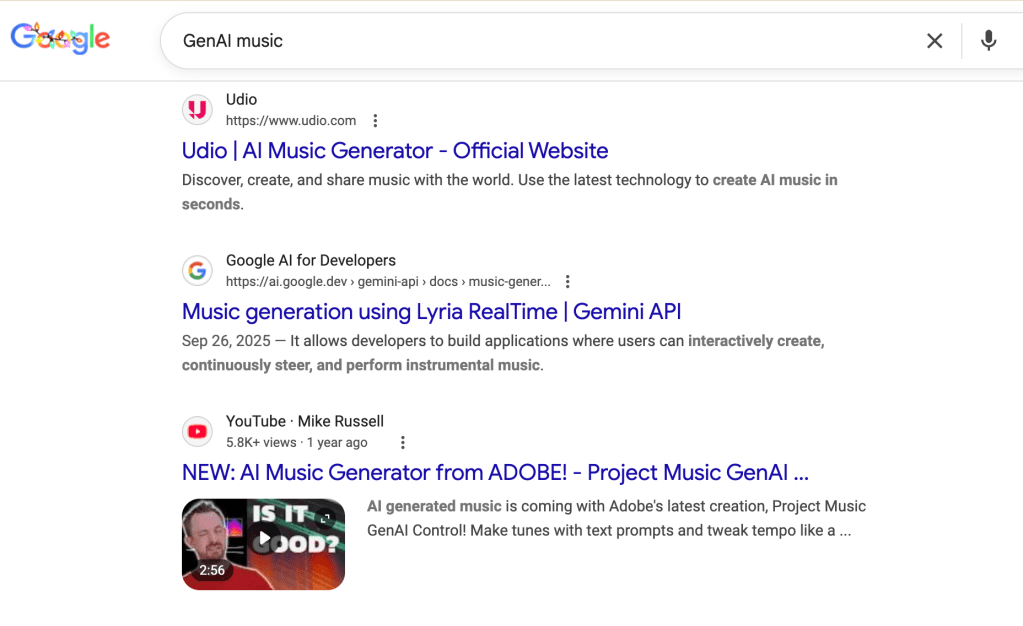

So I started searching for the keyword “GenAI music.” Turns out Adobe, Google’s Gemini, and a bunch of other platforms now let you generate music with a prompt.

But here’s the thing from my angle: music-making is basically emotional writing. Lyrics are feelings. Melodies are memories. And personally, I don’t think an algorithm can replace that.

When digging deeper, I found a BBC article titled “How can you tell if your new favorite artist is a real person?” It talks about some of the issues AI tools have raised for the music industry. If AI can write, sing, mix, master, and drop new albums overnight, how are we supposed to know what’s real? Should AI tracks be labeled? Do listeners deserve transparency?

I looked up whether tools exist to identify AI-generated songs, and I found one called:

The creator explains that the detector uses a Random Forest Classifier—basically a “yes/no” machine that analyzes things like spectral features, harmonics, pitch transitions, and all the nerdy audio stuff I definitely can’t explain. You just upload a track and it tells you how likely it is to be AI.

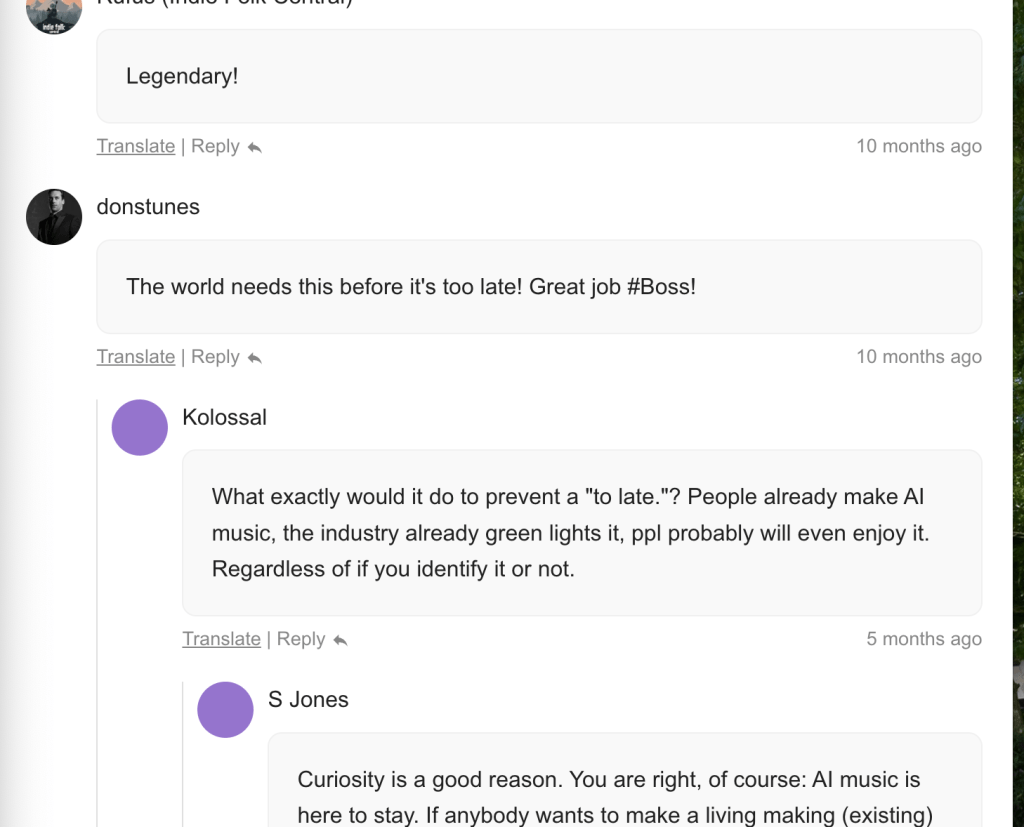
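For the curious, here is a toy sketch of the "lots of little yes/no votes" idea behind a random forest. To be clear, this is not the detector's actual code. The three feature names, the made-up numbers, and the helper functions are all my own assumptions, and each "tree" here is just a single split (a stump) so the whole thing stays short. A real detector would pull its features out of actual audio and use a proper library.

```python
import random
from collections import Counter

# Hypothetical feature names, for illustration only.
FEATURES = ["spectral_flatness", "harmonic_ratio", "pitch_jump_rate"]


def make_fake_tracks(n, is_ai, rng):
    """Synthetic stand-in data: pretend AI tracks score higher on every feature."""
    center = 0.7 if is_ai else 0.3
    return [([rng.gauss(center, 0.1) for _ in FEATURES], int(is_ai))
            for _ in range(n)]


def fit_stump(rows, feature_idx):
    """Pick the threshold on one feature that misclassifies the fewest rows."""
    majority = Counter(label for _, label in rows).most_common(1)[0][0]
    best_errors = None
    best = (feature_idx, float("inf"), majority, majority)  # fallback: no split
    for values, _ in rows:
        threshold = values[feature_idx]
        left = [label for v, label in rows if v[feature_idx] <= threshold]
        right = [label for v, label in rows if v[feature_idx] > threshold]
        if not right:
            continue  # this threshold does not split the data
        left_vote = Counter(left).most_common(1)[0][0]
        right_vote = Counter(right).most_common(1)[0][0]
        errors = (sum(label != left_vote for label in left)
                  + sum(label != right_vote for label in right))
        if best_errors is None or errors < best_errors:
            best_errors = errors
            best = (feature_idx, threshold, left_vote, right_vote)
    return best


def fit_forest(rows, n_trees, rng):
    """Train each stump on a bootstrap sample and one randomly chosen feature."""
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]    # sample with replacement
        feature_idx = rng.randrange(len(FEATURES))   # random feature per stump
        forest.append(fit_stump(sample, feature_idx))
    return forest


def ai_likelihood(forest, values):
    """Fraction of stumps voting 'AI' (label 1) for one track's features."""
    votes = [left if values[idx] <= threshold else right
             for idx, threshold, left, right in forest]
    return sum(votes) / len(votes)


rng = random.Random(0)  # fixed seed so runs are repeatable
tracks = make_fake_tracks(100, True, rng) + make_fake_tracks(100, False, rng)
forest = fit_forest(tracks, n_trees=25, rng=rng)

mystery_track = [0.75, 0.72, 0.70]  # made-up feature values
print(f"AI likelihood: {ai_likelihood(forest, mystery_track):.2f}")
```

Each stump only asks one question about one feature, and the "how likely is it AI" score is simply the share of stumps that said yes. That is why the tool can give a percentage instead of a flat verdict.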

But what I really loved is that the comments section has turned into a mini-community. People are sharing results, comparing notes, arguing over what “sounds human,” and helping each other learn how to listen differently.

It’s basically an Affinity Space.

Just music lovers gathered around a shared curiosity:

What happens when the voice in your headphones might not belong to a person?

Honestly, I think spaces like this matter.

AI songs are this strange hybrid—part technology, part culture—and they make us rethink what a “voice” even means. For Gen Z, music isn’t just entertainment; it’s identity, expression, storytelling, therapy.

So when AI-generated songs flood the feed, something shifts.

Music suddenly feels reproducible, copy-pasteable—like templates instead of lived experience. And it makes human voices feel even more precious.

Maybe that’s why this affinity space exists. It shows a shared instinct among listeners: we want to know what’s real. We want to protect the texture of human emotion, the cracks in the voice, the imperfect lyrics, the stories behind the sound.

AI can sing—but it can’t feel.

And maybe that’s exactly why we’re learning how to listen differently.
